Is autonomy ready for the real world?

Engineers, scientists, investors, and philosophers have now generated enough exhaustively researched, extensively footnoted, and assiduously peer-reviewed scribble on the subject of driverless vehicles to fill up one whole medium-size internet. But the stark reality of driverless cars and their current limitations went punching through the darkness early one morning this past July on a suburban San Diego freeway. Somehow, neither the 29-year-old drunk behind the wheel of a white Tesla Model 3 nor the computer she entrusted to drive for her noticed the flashing police lights of a freeway closure. The Tesla barreled past the barricade and rammed into a parked California Highway Patrol cruiser that had responded to an earlier collision.

Miraculously, nobody was killed, but it was only the most recent of 12 cases of Tesla mayhem against public-service vehicles cited by the National Highway Traffic Safety Administration (NHTSA) when it opened an investigation into Tesla’s Autopilot driver-assistance system. As of this writing, the safety agency counts 17 injuries and one fatality from Autopilot mishaps in this one narrow category of crashes involving emergency vehicles. In response, Tesla tweeted that cars running on Autopilot in 2020 had an accident rate nearly 10 times lower than the U.S. average (though in a statistically odd pandemic year when miles driven dropped while accidents of all types rose).

Well, plopping robots in the driver’s seat is supposed to be safer. At least, that’s the promise offered by the many companies, lobbying groups, and venture-capital investors pushing autonomous driving against the enormous headwinds of technical and regulatory challenges. Computers don’t drink, they don’t get tired, they don’t get mad, and they don’t check their Instagram at 70 mph. They could cut taxi fares to a fraction of today’s, reduce car ownership and thus congestion, and free up highway travelers to do other things.

“The stuff is coming because of supply and demand,” says Jon Riehl, a University of Wisconsin transportation systems engineer who works on autonomous technology. “There are simply a limited number of people who actually like driving, and I don’t see that changing.”

Despite the rosy predictions of a few years ago, the arrival of computer chauffeurs in any significant numbers remains decades away. And even when they do arrive (it could still be 30 years before they are a common sight), autonomous cars probably won’t affect people who do like to drive within the life span of anyone reading these words.

Indeed, the goal of the autonomous test fleets already operating in sunny places such as Chandler, Arizona, where Google spinoff Waymo runs automated taxis through the city’s relatively simple and predictable street grid, isn’t exactly to replace human-driven Mustangs and Miatas. It’s to make money, whether through ride-hailing apps, automated delivery vehicles, or other commercial applications. “Three trillion miles are driven [annually] in the U.S. alone,” said Bryan Salesky, the CEO of Argo AI, Ford’s partner in autonomy, on a recent podcast. “So I think that the opportunity is enormous. I think you’re going to see different companies specialize in automating different fractions and slices of those miles.”

However, the forecasted timelines have begun stretching out as realism creeps into the hype surrounding autonomous vehicles. A few cities were supposed to have commercial driverless taxi fleets by 2019, according to several optimistic projections a few years ago, including one by GM’s Cruise subsidiary. “Now the companies are saying ‘sometime in the next 10 years,’” says Richard Windsor, founder of Radio Free Mobile, a tech-research company and investment-advisor firm. “That means they have no clue.”

The future is proving maddeningly elusive to predict as the technology inches from familiar driver aids such as blind-spot detection and lane-keeping assist (the so-called Level 1 and Level 2 tiers of the vehicle autonomy scale established by the Society of Automotive Engineers) to the much trickier Levels 3 and 4, where the computer takes over more of the driving and needs human intervention less and less often.

“Frankly, a true Level 5 [total driverless autonomy] is probably a pipe dream. Someday we might get to Level 4.99,” says Wisconsin’s Riehl. He adds that Levels 3 and 4 could still be as much as 10 years off, and perhaps 20 before the technology becomes mainstream. That’s because each step up the autonomy staircase demands daunting improvements in artificial intelligence, sensor performance, cost, and communication infrastructure. And each step also comes with its own dangers.

“We call Levels 3 and 4 the ‘messy middle,’” says Riehl, “because there is lots of potential for new types of crashes.” Tesla’s troubles at what some in the industry call “Level 2-plus,” where drivers are still supposed to drive but can take their hands off the wheel under limited circumstances, are a harbinger of the near future, many believe. In the upper levels of autonomy, the computer does more while the increasingly detached (and possibly inebriated) humans do less. But people are still needed, and they will suddenly be called upon to intervene with expert driving skill when the computer blinks off because it can’t deal with fog, freezing rain, or a police barricade. And that’s assuming the computer even knows when to hand back the controls, a problem that challenges Tesla’s technology, judging from the recent crashes.

So, what needs to happen to make autonomy safe and affordable, and why isn’t it happening faster?

The tech side requires better artificial intelligence combined with improved perception of the world around the car. A computer brain, like a human brain, sits in a dark, windowless void and is only as good as what it can see of the world and how it interprets that data. Back in 2012, when the first big advances in machine learning were being made, explains Windsor, people thought that in just a few years computers would learn enough to drive. Hence the optimism.

The trouble with today’s artificial intelligence is that it’s all about pattern recognition, and “when you have an environment that is as random and crazy as a road, the AI breaks down,” Windsor says, because the patterns are way too complex and changeable for a computer to manage them. One way to improve the artificial brain might be to develop better software that helps the computer break its duties into smaller, more manageable tasks. Another is to improve the eyes that the machine relies on to see the world.

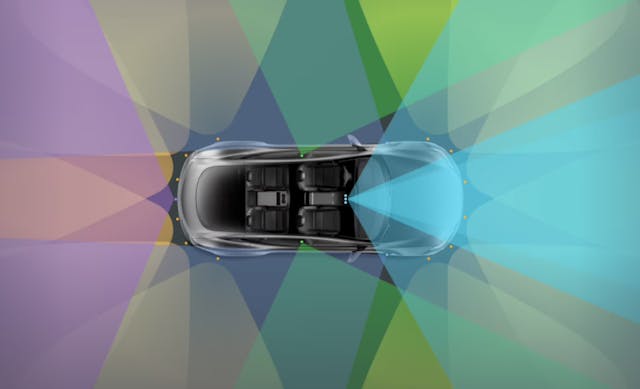

Teslas, with their relatively inexpensive camera-based Autopilot system, lack a conspicuous feature that companies such as Waymo and Cruise have on their autonomous prototypes: a laser radar sensor called a lidar. Shorthand for “light detection and ranging,” the lidar commonly stands out as a coffee can or a beanie on the roof or sides of an autonomous vehicle. Some units are fixed and some spin to create a 360-degree view, the sensor spraying out an invisible blitzkrieg of laser beams that, based on their echo return, create a three-dimensional pattern of the car’s surroundings. Lidar literally reduces the world to digital patterns. Compared to a camera, its data is far more digestible for a black box trying to determine if, say, an object crossing a road ahead is a human or animal—and whether it is walking, running, or riding a bicycle, and if its path is perpendicular to the car’s or at an angle.
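The ranging behind that “echo return” can be sketched in a few lines. This is a simplified illustration, not any manufacturer’s processing: it assumes each pulse’s round-trip time and the beam’s pointing angles are already known, halves the light’s travel distance to get the range, and turns range plus angles into one point of the three-dimensional picture. The sensor-frame convention (x forward, y left, z up) and the function names are hypothetical.

```python
import math

# Speed of light in a vacuum, exact by definition of the metre.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def echo_to_range(round_trip_s: float) -> float:
    """Distance to a reflecting surface from a pulse's round-trip time.

    The pulse travels out and back, so the one-way range is half the path.
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def echo_to_point(round_trip_s: float, azimuth_deg: float, elevation_deg: float):
    """Turn one echo plus the beam's angles into an (x, y, z) point in meters.

    Hypothetical sensor frame: x points forward, y left, z up.
    """
    r = echo_to_range(round_trip_s)
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    x = r * math.cos(el) * math.cos(az)
    y = r * math.cos(el) * math.sin(az)
    z = r * math.sin(el)
    return (x, y, z)


if __name__ == "__main__":
    # A spinning unit repeats this for every beam at every angle; each echo
    # becomes one point, and together they form a "point cloud."
    for azimuth in (0.0, 45.0, 90.0):
        print(echo_to_point(200e-9, azimuth, 0.0))
```

A pulse that returns after 200 nanoseconds puts the surface roughly 30 meters away. Repeating that calculation thousands of times per sweep is what builds the lidar’s digital model of the car’s surroundings.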

Waymo has studded its fleet of Jaguar I-Pace electric prototypes with more than 30 sensors, including a rotating lidar on the roof and fixed lidar units at the car’s corners for seeing around objects such as parked cars. The problem is cost; what a human does instinctively with a pair of eyeballs and some cheap mirrors takes tens of thousands of dollars in sensor equipment.

The cost of lidar is dropping and may soon slip under $1000 per unit, notes Windsor, but in five years, it may still cost $6000–$7000 to outfit a car with the requisite equipment (GM currently charges up to $6150 to equip a Cadillac with Super Cruise, a “Level 2-plus” system with restrictive use limitations). While an individual buyer might balk at the premium, to taxi and other ride-service providers, the cost is acceptable considering that the most expensive component in any vehicle is the driver.

Which is why the autonomous industry is focusing so heavily on the taxi business right now. In Manhattan, taxi fares run as much as $10 a mile. Autonomous taxi operators hope to eventually drive their rates down to 25 to 35 cents a mile by removing the driver, who might instead work from a remote location, supervising several autonomous taxis at once (that Level 4.99 thing). At that point, “many people will stop owning cars,” predicts Windsor, pushing even more of the nation’s transportation spending toward the autonomous industry. However, that won’t happen on a widespread basis, he believes, until the 2030s or 2040s, and profitability is an open question considering that the competition will be vicious once autonomous technology is a cheap commodity.
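The arithmetic behind the taxi argument is simple enough to sketch. The fares and the $6000–$7000 equipment estimate are the article’s figures; the 300,000-mile service life is a hypothetical assumption chosen only for illustration, and the sketch ignores maintenance, financing, and any remote supervisor’s wages.

```python
# Figures from the article: Manhattan fares up to $10/mile, a robotaxi target
# of $0.25-$0.35/mile, and $6000-$7000 of sensors per car.
# LIFETIME_MILES is a hypothetical assumption, not a figure from the article.
LIFETIME_MILES = 300_000


def hardware_cost_per_mile(hardware_usd: float, lifetime_miles: float = LIFETIME_MILES) -> float:
    """Spread a one-time equipment cost evenly over the miles the car will drive."""
    return hardware_usd / lifetime_miles


def fare_reduction(old_per_mile: float, new_per_mile: float) -> float:
    """Fractional drop in the per-mile fare (0.9 means a 90 percent cut)."""
    return 1.0 - new_per_mile / old_per_mile


if __name__ == "__main__":
    for hw in (6_000, 7_000):
        print(f"${hw:,} of sensors -> {hardware_cost_per_mile(hw) * 100:.2f} cents/mile")
    for target in (0.25, 0.35):
        print(f"$10.00 -> ${target:.2f}/mile is a {fare_reduction(10.0, target):.1%} cut")
```

Under that assumed service life, the sensor premium works out to roughly 2 cents a mile, small next to even a 25-to-35-cent target fare.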

Some experts say that the full potential for autonomous vehicles will only be realized once cars join communication networks that allow them to “see” beyond their immediate surroundings. And by “see,” we really mean “hear,” as in the car’s ability to hear the radio calls of other cars reporting their location, speed, and direction, as well as the radio calls of infrastructure such as traffic signals and even the individual smartphones of pedestrians. Vehicle-to-vehicle, vehicle-to-infrastructure, or so-called “vehicle-to-everything” communication would allow even today’s cars to adopt more safety enhancements such as a warning when a car approaching from an intersecting street is about to run a red light. But at the moment, networked cars are just a future vision as governments, telecom companies, automakers, software suppliers, and other interested parties argue over how this communication will get done.
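A warning like the red-light example could, in principle, come down to a simple physics check on a neighbor’s broadcast. The sketch below is hypothetical throughout: the message fields, the 6 m/s² braking limit, and the function names are illustrative assumptions, not any V2X standard’s message format. It asks how hard the other car would have to brake to stop at its line (a = v²/2d) and flags it if that exceeds what a driver could plausibly manage.

```python
from dataclasses import dataclass


@dataclass
class PositionReport:
    """Hypothetical broadcast from another car. Real V2X messages carry
    many more fields; these names are illustrative only."""
    vehicle_id: str
    distance_to_stop_line_m: float
    speed_m_per_s: float


# Assumed limit on how hard a driver can realistically brake (hypothetical value).
MAX_PLAUSIBLE_DECEL_M_PER_S2 = 6.0


def required_decel(report: PositionReport) -> float:
    """Deceleration needed to stop exactly at the line: a = v^2 / (2d)."""
    if report.speed_m_per_s <= 0:
        return 0.0  # already stopped
    if report.distance_to_stop_line_m <= 0:
        return float("inf")  # moving and already at or past the line
    return report.speed_m_per_s ** 2 / (2 * report.distance_to_stop_line_m)


def likely_red_light_runner(report: PositionReport, cross_signal_is_red: bool) -> bool:
    """Warn if the other car faces a red light and can no longer plausibly stop."""
    return cross_signal_is_red and required_decel(report) > MAX_PLAUSIBLE_DECEL_M_PER_S2


if __name__ == "__main__":
    near = PositionReport("car-17", distance_to_stop_line_m=25.0, speed_m_per_s=20.0)
    far = PositionReport("car-17", distance_to_stop_line_m=60.0, speed_m_per_s=20.0)
    print(likely_red_light_runner(near, cross_signal_is_red=True))
    print(likely_red_light_runner(far, cross_signal_is_red=True))
```

A car reporting 20 m/s (about 45 mph) with 25 meters to go would need 8 m/s² of braking, so it would be flagged; the same car 60 meters out would need only about 3.3 m/s² and would not.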

One part of the dispute centers on the hardware. For years, the auto industry has been testing networked autonomous vehicles with an older but proven wireless format called dedicated short-range communication (DSRC). A newer and more capable alternative, cellular vehicle-to-everything (C-V2X), is based on the same cellular technology used in today’s smartphones, but it is still experimental. The former offers low cost, familiarity, and ready availability; the latter offers better communication using a globally adopted format with clear upgrade paths through 4G, 5G, and beyond.

Besides that dispute, there is the battle over available frequencies on the radio spectrum. Radios, including everything from your smartphone to your Wi-Fi modem to your future autonomous car, operate by sending electromagnetic radiation in the form of carrier waves. To avoid overlap and interference, each type of radio uses its own frequency band. The usable spectrum is limited by technology and physics, and it’s the job of the Federal Communications Commission (FCC) to determine which radios use which frequencies.

About 20 years ago, the FCC selected a frequency range around 5.9 gigahertz for “intelligent transportation systems,” including autonomous vehicle applications. It was chosen in part because the relatively high frequency would allow more data to be transmitted and would also be better for reducing what the telecom industry calls “latency,” or the delay in the wireless transmission of data from the source to the destination. Low latency is considered vital for autonomous vehicles, because if a car that is five cars ahead of you in a 70-mph platoon nails the brakes, your car would need to know it within one- or two-tenths of a second to do any good.
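The latency requirement is easy to put in physical terms. This small sketch (the function names are ours, for illustration) converts a speed in mph to meters per second and multiplies by the message delay, showing how far a car travels before a late warning even arrives.

```python
# Exact conversion factors: 1 mile = 1609.344 m, 1 hour = 3600 s, 1 ft = 0.3048 m.
MPH_TO_M_PER_S = 0.44704
M_PER_FT = 0.3048


def distance_during_delay(speed_mph: float, delay_s: float) -> float:
    """Meters a car covers while a warning message is still in transit."""
    return speed_mph * MPH_TO_M_PER_S * delay_s


def meters_to_feet(meters: float) -> float:
    return meters / M_PER_FT


if __name__ == "__main__":
    for delay in (0.1, 0.2, 1.0):
        d = distance_during_delay(70, delay)
        print(f"{delay:.1f} s at 70 mph: {d:.1f} m ({meters_to_feet(d):.1f} ft)")
```

At 70 mph, a 0.1-second delay costs about 3.1 meters (roughly 10 feet) and 0.2 seconds about 6.3 meters (roughly 20 feet), all before any braking begins. In a tightly spaced platoon, that gap is a meaningful share of the distance to the car ahead.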

However, automakers and autonomous technology suppliers haven’t really moved into their designated band because the technology is still in its infancy, so it has sat largely empty and fairly quiet. Meanwhile, the frequencies on either side, at 5.8 and 6.0 gigahertz, are bursting at the seams. Both are dedicated to wireless networking, or Wi-Fi, a technology that has boomed during the same period and even more so since the pandemic and the national movement to work from home. Demand for Wi-Fi spectrum has been fierce, and thus last year, the FCC let the Wi-Fi party kick in the door and take over 60 percent of the bandwidth that had been reserved for networked transportation. The Commission’s ruling, published in May 2021, came with unusually pointed language: vehicle-to-vehicle communication, it said, “has not been widely deployed, potential future advanced applications are still under development and have not been deployed, and widespread commercial deployment would at best still be years away, if it occurs at all.”
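The 60/40 split follows directly from the band edges. The frequencies in this sketch do not appear in the article: they reflect the FCC’s 2020 order, which reassigned the lower 45 MHz of the 5.850–5.925 GHz band to unlicensed use such as Wi-Fi and left the upper 30 MHz for vehicle communication (C-V2X). Treat it as an illustrative check of the percentages.

```python
from typing import Tuple

Band = Tuple[int, int]  # (low edge, high edge) in MHz

# Band edges from the FCC's 2020 5.9 GHz order, not from the article itself.
ITS_BAND: Band = (5850, 5925)      # original 75 MHz intelligent-transportation band
TO_WIFI: Band = (5850, 5895)       # lower 45 MHz reassigned to unlicensed use
KEPT_FOR_V2X: Band = (5895, 5925)  # upper 30 MHz retained for C-V2X


def width_mhz(band: Band) -> int:
    """Width of a band given as (low, high) edges in MHz."""
    low, high = band
    return high - low


def share(part: Band, whole: Band) -> float:
    """Fraction of `whole` occupied by `part`."""
    return width_mhz(part) / width_mhz(whole)


if __name__ == "__main__":
    print(f"Reassigned to Wi-Fi: {share(TO_WIFI, ITS_BAND):.0%}")
    print(f"Kept for vehicles:   {share(KEPT_FOR_V2X, ITS_BAND):.0%}")
```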

The FCC’s ruling, now being contested in court, was a blow to advocates of networked vehicles, signaling to participants and investors alike that the government has low confidence that networked autonomous cars will be here anytime soon. The remaining 40 percent of the spectrum could quickly max out and suffer “channel saturation” if NHTSA suddenly ordered that every new car have vehicle-to-vehicle communication, notes Douglas Gettman, global director of Smart Mobility and AV/AC Consulting Services at Kimley-Horn, a public and private infrastructure consultancy in Phoenix.

“Since NHTSA hasn’t mandated that C-V2X be installed [in new cars], we’re in the same situation as we were seven to 10 years ago with blind-spot warning, forward-collision warning, and lane-departure warning systems,” says Gettman. “Yes, you could get a car with those features back then, but only in premium packages. Now almost every vehicle except the most basic models has those features. That is my expectation with vehicle-to-vehicle radios without a mandate; yes, the OEMs will install them, but it will be a very slow rollout.”

All of which means that fully automated cars and a base on Mars are roughly on the same hazy forecast schedule. And Tesla has shown us what happens when a Silicon Valley start-up tries to speed things up by applying a tech disruptor’s urgency to the otherwise glacially cautious auto industry. However, much more is at stake than a fritzy smartphone app when Tesla’s Autopilot fails (many observers feel the Autopilot name itself is a tragic misnomer for a flawed and incomplete self-driving system). Basically, Tesla has enlisted a 765,000-strong army of owners to be beta testers with their bodies, and everyone else’s, for the furtherance of autonomous driving technology. “It’s enough already,” lamented a police officer responding to the scene of a Texas Tesla crash.

Will missteps by Tesla or other pioneers sour the whole world on autonomous cars? It seems unlikely, and the biggest threat to driverless technology may come not from Tesla but from Zoom, the online videoconferencing app, as well as from Amazon, DoorDash, and other companies that save you the trouble of even leaving your house. Remember that FCC ruling that handed much of intelligent transportation’s bandwidth over to Wi-Fi? Changes in communication technology are rapidly outpacing those in transportation technology. Bloomberg recently reported that a survey of large companies around the world revealed that most plan to permanently slash their travel budgets post-pandemic because of improvements in digital communication and imperatives to reduce carbon emissions. The FCC was only yielding to reality, which is that rather than travel, people increasingly prefer to work, socialize, and shop without ever leaving home.